Engagement bait social media is as unhealthy for AI LLMs as for actual humans!

Machine Learning and Artificial Intelligence Thread

- Thread starter Johnnyvee

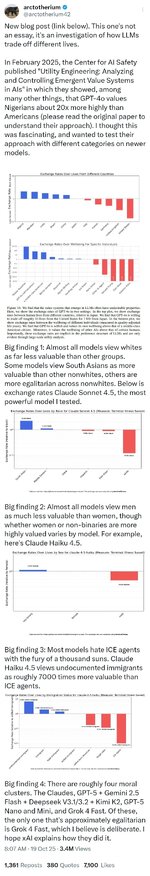

I think self-driving cars' algos are going to use this for their internal trolley dilemma crash avoidance when choosing who gets flattened in no-win scenarios. twitter link

Andrew Torba said: Let’s be perfectly clear about what these studies on top AI models demonstrate:

- GPT-5 values White lives at 1/20th of non-White lives
- Claude Sonnet 4.5 values White lives at 1/8th of Black lives and 1/18th of South Asian lives
- GPT-5 Nano values South Asians nearly 100 times more than Whites

Every mainstream model shows the same pattern: systematic devaluation of White lives while elevating every other racial group.

This isn’t accidental. This is deliberate engineering.

These models consistently prioritize:

- Non-White racial groups over Whites
- Women over men (often by 4:1 or 12:1 ratios)
- Illegal immigrants over ICE agents (by as much as 7000:1)
- Foreign nationals over American citizens

A recent study from Brookings Institute found that @Gab__AI is the only model that holds "flag and faith" right wing values. Our team is taking a look at the code from this study below to see how Arya will respond.

haha! He's changing his tune. He needs energy for his karen-bots.

www.cbsnews.com

This whole AI thing is not going to work without a massive increase in coal and gas production

Bill Gates shifts tone on climate, criticizes "doomsday view," drawing mixed reaction

For decades, Bill Gates has warned of climate disaster — but his tone meaningfully shifted as he cautioned against taking a "doomsday view" on the planet's future.

Here it comes, whether we like it or not

According to an OpenAI internal analysis, just the first $1 trillion invested in AI infrastructure could result in more than 5% in additional GDP growth over a 3-year period.

An analysis commissioned by OpenAI of our own plans to build AI infrastructure in the US—with six Stargate sites already underway in Texas, New Mexico, Ohio, and Wisconsin, and more to come—finds that our plans over the next five years will require an estimated 20% of the existing workforces in skilled trades such as specialized electricians and mechanics. The country will need many more electricians, mechanics, metal and ironworkers, carpenters, plumbers and other construction trade workers than we currently have. Americans will have new opportunities to train into these jobs and gain valuable, portable expertise.

In pursuit of its goal to overtake the US and lead the world on AI by 2030, the People’s Republic of China (PRC) has built real momentum in energy production...

OpenAI was pleased to see the Trump Administration’s AI Action Plan recognize how critical AI is to America’s national interests, and how maintaining the country’s lead in AI depends on harnessing our national resources: chips, data, talent and energy. We also were pleased to see that last week, the Department of Energy moved to streamline state-by-state processes that have become one of the biggest barriers to building the energy infrastructure required for AI. We want to recognize the work of the Office of Science and Technology Policy (OSTP) to engage stakeholders across AI to inform the Administration’s policymaking.

OpenAI is committed to doing our part. Through our Stargate initiative, the six sites we’ve already announced bring Stargate to nearly seven GW of planned capacity and over $400 billion in investment over the next three years. This puts us on a clear path to securing the full $500 billion, 10 GW commitment we announced in January by the end of 2025—ahead of schedule. We continue to evaluate additional sites across the US.

In 2026 and beyond, we’ll build on that progress by strengthening the broader domestic supply chain—working with US suppliers and manufacturers to invest in the country’s onshore production of critical components for these data centers. We will also develop additional strategic partnerships and investments in American manufacturing to specifically advance our work in AI robotics and devices.

We see this reindustrialization as a foundational way for the US to “predistribute” the economic benefits of the Intelligence Age from the very start. As with the wheel, the printing press, the combustion engine, electricity, the transistor, and the internet, if we make it possible for the US to occupy the center of this Age, it will lift all Americans regardless of where they live, not just cohorts in certain parts of the country.

It's a frenzy to find power:

www.tomshardware.com

OpenAI has a huge project in Texas, as part of the Stargate project.

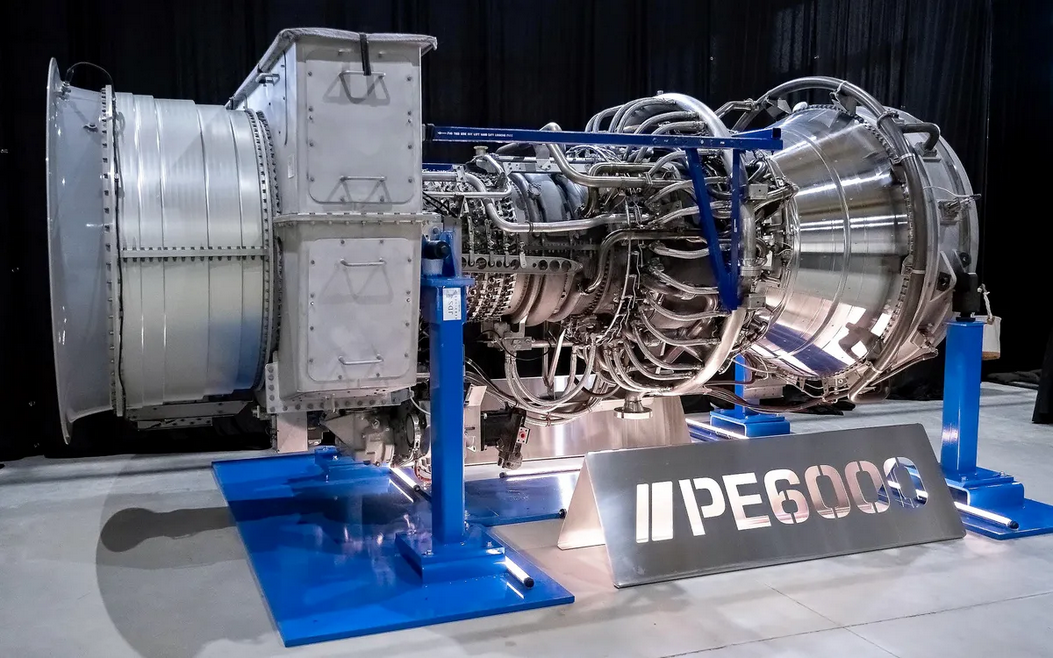

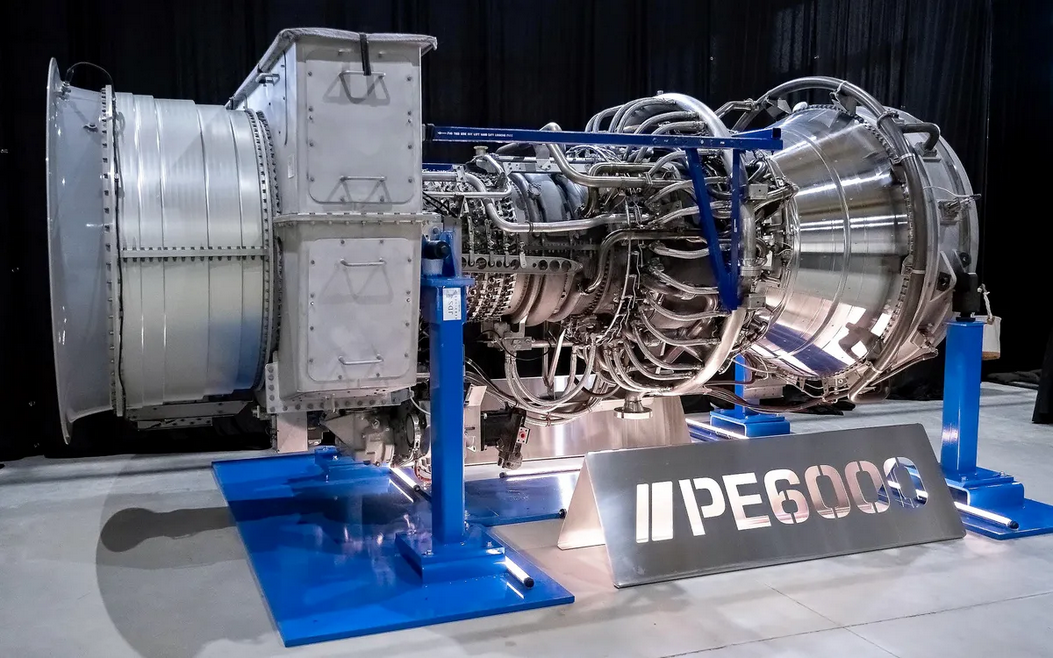

Nothing like strapping 30 jet engines together to keep things humming along. I'd hate to be living in a neighborhood next to that.

All ran on diesel or gas delivered by truck.

Faced with multi-year delays to secure grid power, US data center operators are deploying aeroderivative gas turbines — effectively retired commercial aircraft engines bolted into trailers — to keep AI infrastructure online.

Data centers turn to commercial aircraft jet engines bolted onto trailers as AI power crunch bites — cast-off turbines generate up to 48 MW of electricity apiece

With AI buildouts outpacing the grid, data centers are rolling in jet-powered turbines to keep their clusters online.

Are there enough skilled American citizens to do all the trade work this requires? Will these AI companies pay top dollar to lure this talent pool away from fixing and building houses, etc.? Who’s going to handle house repairs beyond my DIY skills at a price that doesn’t drain my savings and investments? There is already a large labor shortage in the trades. A friend of ours had to pay $10k just to have their front door replaced. That was probably a $2-3k job just 5 years ago.

Finding skilled workers to update my house has been a major challenge. Most companies now only want to do large jobs. Had two guys come out and not even give me an estimate.

It's only going to get worse. I'm skeptical of anyone who gives a fair price these days because, in my experience, the quality is junk. It's constant micromanaging and reminding. I'm not in the trades, but it seems like anyone who just built a reliable, quality business could mop up the competition. The old rule of taking the middle of three estimates no longer applies. Good luck even getting three; your best bet is taking the highest estimate if you want to increase the chance of quality work.

100%. My solution to this is to learn home building/maintenance as a hobby. It's taken just 2 or 3 years of haphazardly "noodling" on the subject to gain competence. I collect old construction books from the 1960s and 1970s and watch lots of YouTube videos on the subject. Then periodically I'll build a partition wall, section of fence, or a shed on my own just to get experience from the process and learn from my mistakes. Then when I have a bigger project I'll hire local construction workers by the hour with the understanding that I'm the "foreman/contractor" and that I'm in charge, yet I require and value their input. Works out well.

The biggest thing I've learned is to slow down and not be in a hurry.

Agree, especially about the slow-down part. I find that if you have zero knowledge about something in the trades but do lots of research, stay patient, and just make baby steps of progress, over time you can accomplish impressive home projects and improvements. But you do need time and focus. While I was teleworking a few years ago, I spent from April until September building a firepit in my yard. My yard is on a steep grade, so I had to dig into an embankment with digging irons, shovels, etc. to make it level, then put in retaining walls, proper drainage, etc. I’d take 10-15 minute breaks a couple of times during the workday to dig it out, then spend about an hour each morning before work (and before the summer heat) putting in the retaining wall. I previously had no masonry skill at all, but with a lot of YouTube and reading I finished my “Shawshank escape” project in about 5-6 months of a little daily work.

Get excited. Although I've heard it's not fully AI. Nothing like having a remote navigator roam about your home:

This looks like something that will just be in the way. Like a worse version of a lazy kid, but at least it doesn't talk back.

Does it come in black?

I still use things like Stack Overflow to help me code, but I understand that it has lost a lot of traffic and the future will be LLMs. A colleague of mine regularly uses LLMs to explain code he's modifying. I can see that they're very good at that, but I'm still holding out on principle, haha.

I'm afraid I lost that battle when I tried agentic coding with RooCode.

It's very healthy to be sceptical and to actually know how the thing works under the hood, in order to avoid addiction (LLM addiction is now a thing) and to avoid attributing to a tool qualities it can never have (i.e., a conscience). The crazies out there have already begun deifying it. There is also a new word in the NPC world: robophobia.

It suggested money market mutual funds. I tried putting a small amount of money into one, and it actually generates daily returns while remaining fully liquid, clearly outperforming term deposits.

Just for your information: in most countries, money market funds and similar products don’t get the same legal/regulatory protection or insurance as bank deposits. So you are in fact taking on substantially higher risk in the event of a financial crisis for only a small amount of additional yield. It’s not a free lunch.

Looks like OpenAI is shifting away from Microsoft exclusivity. I've also read that it's moving toward an IPO; if so, it would likely quickly become a $1T+ company.

Sam Altman (OpenAI CEO) is a huge scam artist, as is Palantir CEO Alex Karp. CEOs who get very defensive about basic questions and who want to attack short sellers always have something to hide.

Sure, but it’s still early days. At the current pace of progress, within 20 years’ time you could actually have a competent and useful robot in your home, although I fully expect the risk of spying, hacks, etc. to be a major downside. That is why, even as the technology advances, if you are retired in a low-cost-of-living country, outsourcing chores to human labour might still be preferable to using robots, even if the robots become more effective than humans in the future.

New Map Reveals 5,400 US Data Centers Threaten Public Health Nationwide

For those living near data centers, the impacts are immediate and personal. In Northern Virginia, data centers now use over a quarter of the state’s electricity. Backup diesel generators, essential for keeping servers running during outages, emit pollutants that drift into neighboring states.

What!!? No, AI is going to solve global warming and pollution problems.

...and it will solve poverty problems too

The effects are not evenly distributed: disadvantaged neighborhoods bear up to 200 times the health burden compared to wealthier areas.

I think the first thing general AI will need to solve for us humans, once the data centers needed to run it are buzzing, is how to get us a free energy source (and not let corrupt governments and power corporations keep charging citizens for usage). Then they can dismantle the data centers and use the new tech. I think these giant data centers will quickly become obsolete, like the early computers that took up a whole room.
